Challenge

Titans of War Ltd and Swedish studio 100 Yards needed a multiplayer backend for their competitive card game built in Unreal Engine. The requirements were strict: fully server-authoritative gameplay (no client trust), dynamic scaling to handle unpredictable player loads, and a low infrastructure cost per concurrent player. Because this is a high-stakes competitive title, the architecture had to make cheating structurally ineffective while keeping latency tight enough for responsive card play.

Solution

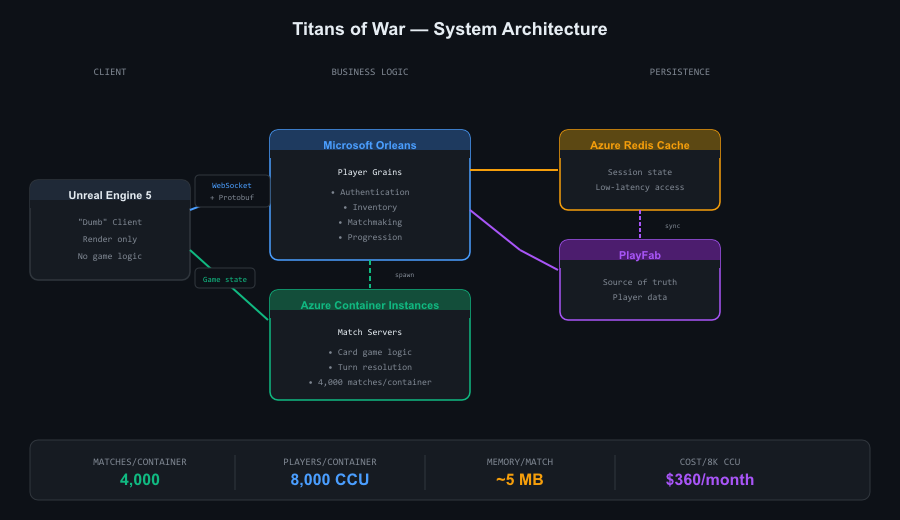

I designed and implemented a hybrid architecture using Microsoft Orleans for player-facing business logic and Azure Container Instances for match simulation.

Player layer (Orleans + Actor model): Orleans implements the Actor model, where each player is an isolated, single-threaded grain encapsulating its own state. Because a grain processes one message at a time, player state needs no locks and is not exposed to shared-memory race conditions. The model maps naturally to player sessions: one grain per player handles authentication, inventory, matchmaking, and progression. PlayFab serves as the source of truth, with Azure Redis Cache as the hot-path cache layer.
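To illustrate the single-threaded grain idea, here is a minimal sketch in Python with asyncio. It is not the production code (Orleans grains are written in C#), and `PlayerGrain`, `add_gold`, and the mailbox layout are hypothetical names chosen for the example. It only shows why serializing messages through one mailbox makes a read-modify-write on player state safe without a lock.

```python
import asyncio
from typing import Optional


class PlayerGrain:
    """Hypothetical stand-in for a per-player grain: private state plus a mailbox."""

    def __init__(self, player_id: str) -> None:
        self.player_id = player_id
        self.gold = 0  # private state, mutated only by the grain's own loop
        self._mailbox: asyncio.Queue = asyncio.Queue()
        self._task: Optional[asyncio.Task] = None

    def start(self) -> None:
        self._task = asyncio.create_task(self._run())

    async def _run(self) -> None:
        # Messages are handled strictly one at a time, so no lock is needed.
        while True:
            amount, reply = await self._mailbox.get()
            current = self.gold
            await asyncio.sleep(0)  # yield mid-update; no other handler can interleave
            self.gold = current + amount
            reply.set_result(self.gold)

    async def add_gold(self, amount: int) -> int:
        # Callers never touch state directly; they enqueue a message and await the reply.
        reply = asyncio.get_running_loop().create_future()
        await self._mailbox.put((amount, reply))
        return await reply

    async def stop(self) -> None:
        if self._task is not None:
            self._task.cancel()
            try:
                await self._task
            except asyncio.CancelledError:
                pass


async def demo() -> int:
    grain = PlayerGrain("player-1")
    grain.start()
    # 100 concurrent callers; the mailbox serializes their updates.
    await asyncio.gather(*(grain.add_gold(1) for _ in range(100)))
    total = grain.gold
    await grain.stop()
    return total


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

If the callers updated `gold` directly with the same yield in the middle, updates could be lost; routing every change through the mailbox is what removes that failure mode.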

Match layer (Container Instances): When a match starts, a dedicated container spins up to run the game simulation. This strict separation keeps business logic (currencies, rewards, rankings) decoupled from game logic (card rules, turn resolution, win conditions). Each D8e_v5 container handles 4,000 concurrent matches (8,000 players) at a cost of $360/month, which works out to roughly $0.045 per concurrent user per month.
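The capacity figures can be checked with a few lines of arithmetic. The sketch below uses only numbers stated in this case study (4,000 matches, two players per match, $360/month, and the approximate 5 MB per match listed under Results); the function names are illustrative.

```python
def cost_per_ccu(monthly_cost_usd: float, matches: int, players_per_match: int = 2) -> float:
    """Monthly cost divided by the number of concurrent players on one container."""
    return monthly_cost_usd / (matches * players_per_match)


def match_memory_gb(matches: int, mb_per_match: float) -> float:
    """Approximate memory held by live match state, in GB (1 GB = 1000 MB)."""
    return matches * mb_per_match / 1000


if __name__ == "__main__":
    print(f"{cost_per_ccu(360.0, 4_000):.3f} USD per CCU per month")
    print(f"{match_memory_gb(4_000, 5):.1f} GB of match state per container")
```

At these figures, match state accounts for about 20 GB per container, and the cost lands at $0.045 per concurrent user per month.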

Client communication: The Unreal client connects via WebSockets with Protocol Buffers for serialization, which gives compact payloads, strong typing, and easy versioning. The client is intentionally "dumb": it sends player intents, receives authoritative game state, and renders. No game logic runs client-side.

Anti-cheat by architecture: Because the server calculates all outcomes, traditional cheats (memory editing, packet manipulation) are ineffective. The client never receives information it shouldn't have: hidden cards stay hidden until the server reveals them.
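One way to picture this information hiding is a per-player projection of the full match state, computed on the server before anything is sent. The sketch below is an assumption about the general shape, not the shipped implementation: `MatchState`, `PlayerState`, and `view_for` are hypothetical names, and the real payloads are Protocol Buffers messages rather than Python dictionaries.

```python
from dataclasses import dataclass, field


@dataclass
class PlayerState:
    hand: list[str] = field(default_factory=list)
    deck: list[str] = field(default_factory=list)
    board: list[str] = field(default_factory=list)


@dataclass
class MatchState:
    players: dict[str, PlayerState]


def view_for(state: MatchState, viewer_id: str) -> dict:
    """Build the only payload a given client receives for this match."""
    view = {}
    for player_id, player in state.players.items():
        # Public information: cards on the board and how many cards remain in the deck.
        entry = {"board": list(player.board), "deck_count": len(player.deck)}
        if player_id == viewer_id:
            entry["hand"] = list(player.hand)  # a player sees their own hand
        else:
            entry["hand_count"] = len(player.hand)  # opponents expose only a count
        view[player_id] = entry
    return view


if __name__ == "__main__":
    state = MatchState(players={
        "alice": PlayerState(hand=["Knight"], deck=["Archer", "Mage"], board=["Wall"]),
        "bob": PlayerState(hand=["Dragon", "Spy"], deck=["Golem"]),
    })
    print(view_for(state, "alice"))
```

Because the opponent's card names are never serialized into the viewer's payload, inspecting client memory or network traffic cannot reveal them.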

What Made This Hard

The existing codebase was game logic only — a function-driven, synchronous system with no multiplayer infrastructure, no information hiding, and no concept of server authority. All game state was exposed to all clients, making cheating trivial.

I built the entire multiplayer and F2P infrastructure from scratch: matchmaking, player progression, inventory, session management, the full Orleans backend. The challenge was integrating this event-driven architecture with legacy game logic that was never designed for it — wrapping synchronous, stateful card resolution in a system that needed to emit events, enforce information hiding, and maintain server authority.

Card games generate dense sequences of state changes (draw, play, trigger, resolve, trigger again...), and each event potentially affects rankings, quests, and rewards. The architecture needed to:

- Wrap function-driven game logic in an event-emitting layer

- Enforce server-authoritative state with proper information hiding

- Process match events in real time with sub-100 ms latency

- Reliably propagate results to the business logic layer

- Handle disconnections and reconnections mid-match without state corruption

- Scale container instances dynamically based on matchmaking demand
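The first and fifth requirements above can be sketched together: a thin wrapper calls the synchronous legacy function, works out which parts of the state changed, and emits a sequenced event that a reconnecting client could replay from. This is a simplified illustration under stated assumptions, not the production design. `EventEmittingMatch`, `legacy_draw_card`, and the dictionary-based state are hypothetical, and snapshotting with `deepcopy` is just the simplest way to detect changes in an example.

```python
import copy
from typing import Any, Callable

State = dict[str, Any]
Event = dict[str, Any]


# Hypothetical legacy-style function: synchronous, mutates state in place, returns nothing.
def legacy_draw_card(state: State, player: str) -> None:
    card = state["decks"][player].pop()
    state["hands"][player].append(card)


class EventEmittingMatch:
    """Wraps function-driven game logic and emits one event per applied action."""

    def __init__(self, state: State) -> None:
        self._state = state
        self._subscribers: list[Callable[[Event], None]] = []
        self._log: list[Event] = []

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)

    def apply(self, action: str, fn: Callable[..., None], *args: Any) -> Event:
        before = copy.deepcopy(self._state)
        try:
            fn(self._state, *args)
        except Exception:
            # Roll back so a failed action cannot leave the state half-updated.
            self._state.clear()
            self._state.update(before)
            raise
        changed = sorted(k for k in self._state if self._state[k] != before.get(k))
        event = {"seq": len(self._log) + 1, "action": action, "args": args, "changed": changed}
        self._log.append(event)
        for handler in self._subscribers:
            handler(event)
        return event

    def events_since(self, seq: int) -> list[Event]:
        """Events a reconnecting client missed after sequence number `seq`."""
        return [e for e in self._log if e["seq"] > seq]


if __name__ == "__main__":
    match = EventEmittingMatch({"decks": {"alice": ["Mage", "Knight"]}, "hands": {"alice": []}})
    match.subscribe(print)
    match.apply("draw", legacy_draw_card, "alice")
```

In a real deployment, each event would also pass through a per-player filter before leaving the server, so that replayed events respect the same information hiding as live ones.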

The clean separation between Orleans (persistent, player-centric) and Container Instances (ephemeral, match-centric) came from this constraint: the legacy game logic lived in containers, while everything new lived in Orleans.

Results

- Status: In production

- Concurrent matches per container: 4,000 (8,000 players)

- Memory per match: ~5 MB

- Infrastructure cost: $360/month per 8,000 CCU

- Cost per CCU: ~$0.045/month

- Architecture: Fully server-authoritative, structurally cheat-resistant

Executive Producers: Jens Klang