Challenge

Large-scale public gaming events are a content goldmine, but they're technically brutal. When a streamer tells 50,000 viewers to "join now," most infrastructure collapses within seconds. Player counts spike unpredictably, DDoS attacks are common, and a failed event means a damaged reputation and lost content.

The goal: build infrastructure that can handle 1,000+ concurrent players in a competitive Minecraft event, scale horizontally as demand grows, survive viral load spikes, and deliver a fair, lag-free experience regardless of where players connect from, all while keeping costs practical for creator budgets.

Solution

I designed a globally distributed event infrastructure using Microsoft Orleans for orchestration and Hetzner dedicated servers for game execution.

DDoS protection layer (TCPShield + Cloudflare): All traffic passes through enterprise-grade DDoS mitigation before reaching any game infrastructure. L3/L4 filtering at global points of presence stops attacks at the edge, not at the server.

Proxy layer (Hetzner + Velocity): Regional proxy servers accept player connections and request assignments from Orleans. Players connect to their nearest region (NA, EU, Asia), then get routed to the appropriate game server. The proxy layer scales horizontally: as demand increases, more instances can be spun up with no code changes required.
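To make the assignment step concrete, here is a minimal sketch of how a request from a proxy could be resolved. It is not the production Orleans code. The `GameServer` fields, the `assign_server` function, and the "prefer the player's region, then pick the least-loaded server" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GameServer:
    # Hypothetical view of a game server as the orchestrator might track it.
    name: str
    region: str
    players: int
    capacity: int

    @property
    def free_slots(self) -> int:
        return self.capacity - self.players


def assign_server(servers: list[GameServer], player_region: str) -> Optional[GameServer]:
    """Pick the least-loaded server with free slots, preferring the player's region."""
    open_servers = [s for s in servers if s.free_slots > 0]
    if not open_servers:
        return None  # caller would queue the player or request a scale-up
    local = [s for s in open_servers if s.region == player_region]
    candidates = local or open_servers  # fall back to any region if local is full
    return min(candidates, key=lambda s: s.players / s.capacity)


servers = [
    GameServer("eu-1", "EU", players=180, capacity=200),
    GameServer("eu-2", "EU", players=40, capacity=200),
    GameServer("na-1", "NA", players=10, capacity=200),
]
print(assign_server(servers, "EU").name)  # eu-2
```

Returning `None` when every server is full keeps the policy decision (queue, reject, or scale up) with the caller rather than burying it in the routing function.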

Game server layer (Hetzner Dedicated + Paper): Heavily optimized Paper servers handle physics, world logic, and player interactions. Each server pushes state to Orleans for global coordination. Because this layer scales horizontally, there is no fixed upper limit: add servers as needed, and Orleans handles the orchestration.

Orchestration layer (Microsoft Orleans + Redis): Orleans manages everything: server assignments, player routing, real-time leaderboards, event state, and auto-scaling triggers. Redis provides hot-path caching for session state. A player in Europe and a player in Asia compete on the same global leaderboard with authoritative, synchronized state.
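The idea of a single authoritative leaderboard fed by many game servers can be sketched as follows. This is an illustrative model only: the `Leaderboard` class, its method names, and the "servers push score deltas" protocol are assumptions, and the real system keeps this state in Orleans rather than in a plain Python object.

```python
import heapq
from collections import defaultdict


class Leaderboard:
    """Single owner of global scores; game servers only send score deltas."""

    def __init__(self) -> None:
        self._scores: defaultdict[str, int] = defaultdict(int)

    def apply(self, deltas: dict[str, int]) -> None:
        # Merge one batch of score changes reported by any game server.
        for player, delta in deltas.items():
            self._scores[player] += delta

    def top(self, n: int) -> list[tuple[str, int]]:
        # Highest score first; ties broken by player name for a stable order.
        return heapq.nsmallest(
            n, self._scores.items(), key=lambda kv: (-kv[1], kv[0])
        )


board = Leaderboard()
board.apply({"alice": 30, "bob": 10})   # batch from a hypothetical EU server
board.apply({"chen": 25, "alice": 5})   # batch from a hypothetical Asia server
print(board.top(2))  # [('alice', 35), ('chen', 25)]
```

Because every server reports deltas to one owner instead of keeping its own ranking, a player who scores on two different servers still ends up with one combined total.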

What Made This Hard

Vanilla Minecraft servers cap at ~200 players before performance degrades. Public events need 5-10x that capacity, plus resilience against bad actors who will inevitably try to ruin the stream.

The architecture had to:

- Distribute players across multiple game servers while maintaining a single competitive instance

- Synchronize game state globally with sub-second latency

- Handle connection spikes when a streamer goes live (0 to 10,000 players in minutes)

- Survive DDoS attacks without interrupting active players

- Track leaderboards and scoring across distributed servers in real-time

- Scale down gracefully when the event ends (cost efficiency)
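
The scale-up and scale-down requirements above come down to simple capacity arithmetic. The sketch below uses the ~200 players per server figure mentioned earlier; the 25% headroom and the function names are assumptions chosen for illustration, not the tuned values from the event.

```python
import math


def servers_needed(players: int, per_server_cap: int = 200, headroom: float = 0.25) -> int:
    """Number of game servers required, keeping a fraction of each server free."""
    if players <= 0:
        return 0
    usable = per_server_cap * (1 - headroom)  # e.g. 150 usable slots out of 200
    return math.ceil(players / usable)


def scaling_delta(current_servers: int, players: int) -> int:
    """Positive: servers to add. Negative: servers that can be drained and removed."""
    return servers_needed(players) - current_servers


print(servers_needed(1_000))      # 7
print(servers_needed(15_000))     # 100
print(scaling_delta(7, 10_000))   # 60
print(scaling_delta(67, 0))       # -67
```

A negative delta only says how many servers are surplus; draining active players off them before shutdown is a separate step.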

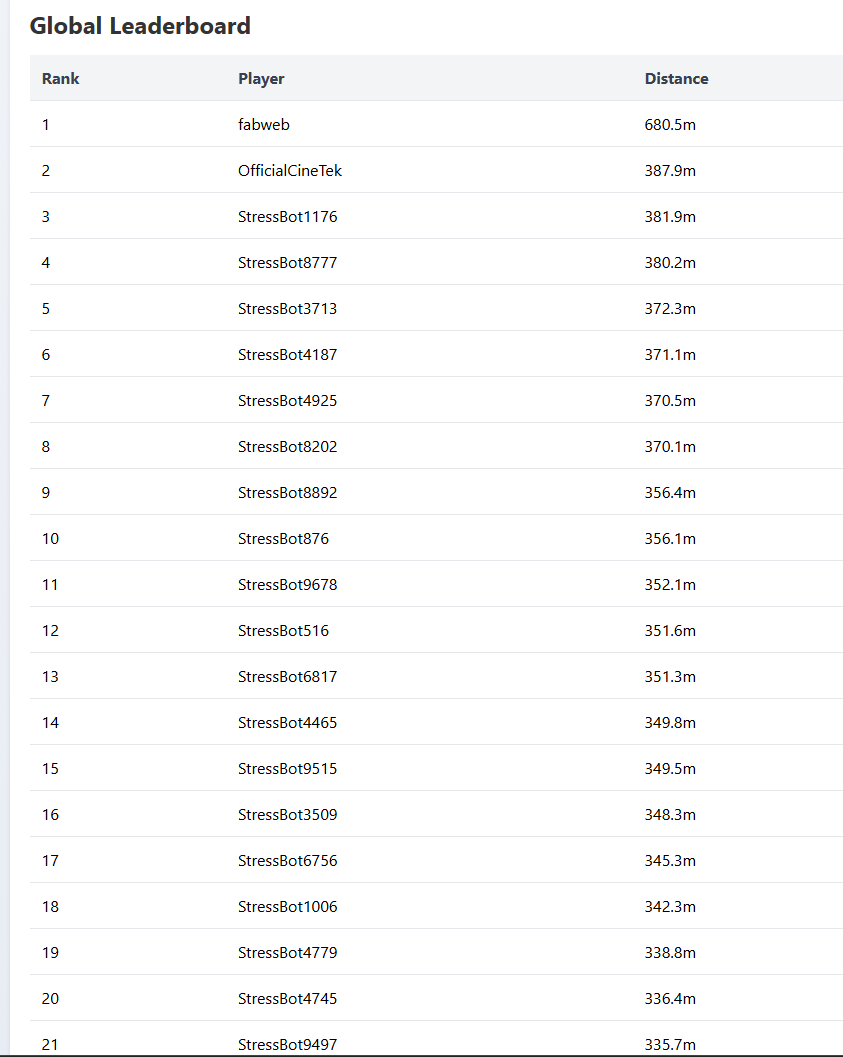

I stress-tested with 15,000 concurrent bots before any real players joined, simulating worst-case viral load, measuring failure points, and tuning until it held. The bots don't just connect. They play: they move, they compete, and they stress the leaderboard system and game logic simultaneously.
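
A viral spike can be modeled in a load test as a ramp of bot connections. The sketch below is a hypothetical linear ramp schedule, not the actual test harness; the function names and the 60-second ramp are assumptions.

```python
def ramp_schedule(target_bots: int, ramp_seconds: int) -> list[int]:
    """Cumulative bot count after each second of a linear ramp ending at target_bots."""
    if target_bots < 0 or ramp_seconds <= 0:
        raise ValueError("target_bots must be >= 0 and ramp_seconds must be > 0")
    return [target_bots * (t + 1) // ramp_seconds for t in range(ramp_seconds)]


def joins_per_second(schedule: list[int]) -> list[int]:
    """How many new bots to connect during each second of the schedule."""
    previous = 0
    joins = []
    for total in schedule:
        joins.append(total - previous)
        previous = total
    return joins


schedule = ramp_schedule(15_000, 60)
print(schedule[0], schedule[-1])        # 250 15000
print(joins_per_second(schedule)[:3])   # [250, 250, 250]
```

Shortening `ramp_seconds` makes the spike steeper, which is one way to probe how fast a connection surge the system can absorb.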

Results

- Stress-tested capacity: 15,000 concurrent bot players

- Architecture: Horizontally scalable, no fixed upper limit

- Global distribution: Multi-region (NA, EU, Asia) with unified game state

- DDoS protection: Enterprise-grade (TCPShield + Cloudflare)

- Leaderboard sync: Real-time, cross-server, authoritative

- Client compatibility: Vanilla Minecraft Java (no mods required for players)